From Proof to Practice: Are Control Systems ready for ML?

We continue our search for answers on how to increase ML use in the day-to-day operations of accelerators and other Big Physics Machines.

This time around, we asked the control systems community at the recent ICALEPCS in Chicago a simple question:

ML is here: Are Control Systems ready?

Seventy-five experts responded, drawn mainly from accelerator and large-facility control teams.

Read on to see what they have to say about Control Systems readiness for Machine Learning.

This blog is the continuation of our exploration of Machine Learning usage inside the accelerator community.

Read more about our findings from IPAC on ML usage in accelerators here.

A community in transition

The numbers indicate that the Control Systems community is undergoing a transition.

At the respondent level (N = 75), answers are split almost evenly: 48% report that their control systems are ready for machine learning, while 52% say they are not ready.
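As a rough sanity check on "almost evenly", the percentages can be turned back into head counts. The sketch below is illustrative only: the counts are inferred from the published percentages rather than taken from the raw survey data, and the margin of error uses a simple normal approximation that we assume is adequate as a rough guide at this sample size.

```python
import math

def split_summary(n_total, pct_yes):
    """Return (yes count, no count, approximate 95% margin of error) for a yes/no poll."""
    # Counts are reconstructed from the percentage; they are not raw survey data.
    n_yes = round(n_total * pct_yes / 100)
    n_no = n_total - n_yes
    p = n_yes / n_total
    # Normal-approximation margin of error (an assumption, only a rough guide).
    margin = 1.96 * math.sqrt(p * (1 - p) / n_total)
    return n_yes, n_no, margin

n_yes, n_no, margin = split_summary(75, 48)
print(n_yes, n_no, round(margin * 100, 1))  # 36 39 11.3
```

Under these assumptions, a four-point gap between "ready" and "not ready" sits well inside a margin of roughly eleven percentage points, which is why we read the result as an even split rather than a narrow majority.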

This balance suggests that the community is shifting from exploration to implementation: many facilities now have credible ML footholds, but just as many still wrestle with foundational issues such as data alignment and legacy architectures.

Looking back, this also confirms what we established in our first blog: there is a lot of pioneering effort, but it remains fragmented.

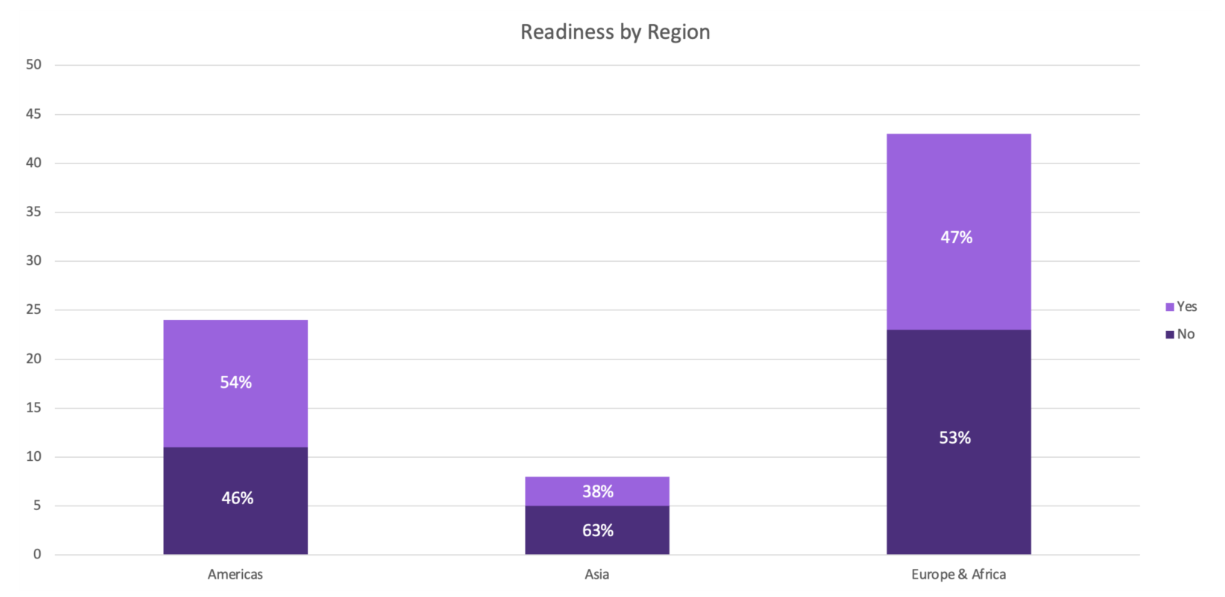

Regional contrasts

When grouped into three macro-regions, readiness levels diverge:

Figure 1: Readiness by region (Americas, Asia, Europe & Africa)

The Americas lead in operational ML readiness, with several facilities already integrating ML into beam optimisation and diagnostics.

Europe + Africa show strong research activity but slower control-room adoption, while Asia reports the highest share of “not ready” responses, reflecting both newer facilities and fragmented legacy stacks.

Compared with the IPAC’25 poll, the control systems community is more reserved about ML readiness, but the data do not give a definite answer to the question:

Is the control systems community more reserved about ML readiness because of the day-to-day realities of integration and guardrails, or because the use cases they see are earlier in the lifecycle?

While accelerator specialists at IPAC’25 reported broader ML use, the control-system engineers at ICALEPCS 2025 highlight the gap between algorithmic promise and infrastructure reality.

Institutional readiness

The organisational split is clear: public research institutions account for most respondents and most “no” votes (54%), while private organisations, though fewer, report proportionally higher readiness (67%), often tied to cleaner data architectures and shorter deployment cycles.

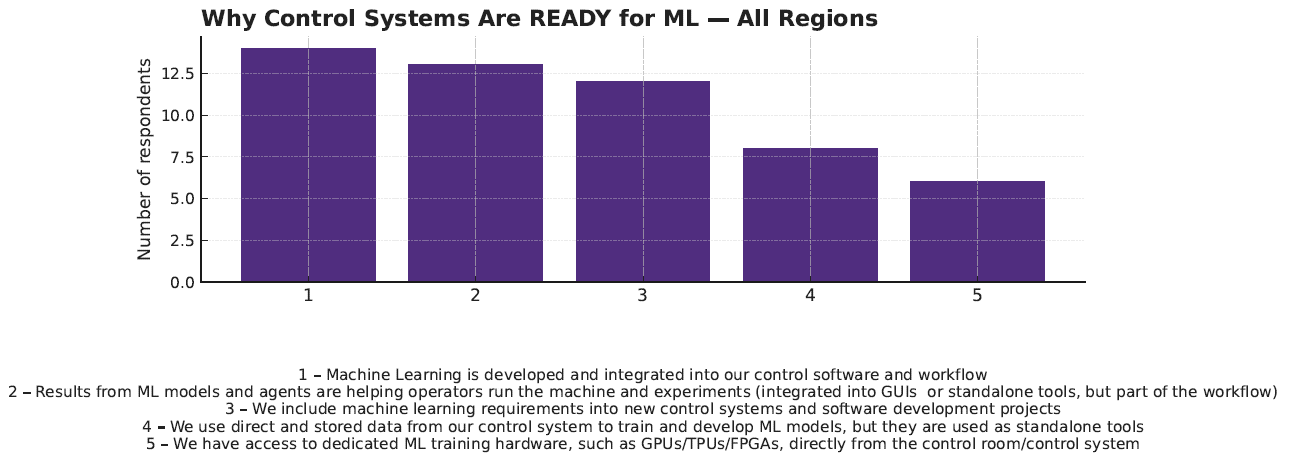

Why sites are (or aren’t) ready

Respondents who marked “ready” most often pointed to:

- Models integrated into existing workflows

- Operator visibility of ML outputs

- ML requirements included in new projects

- Access to computational services close to the machine

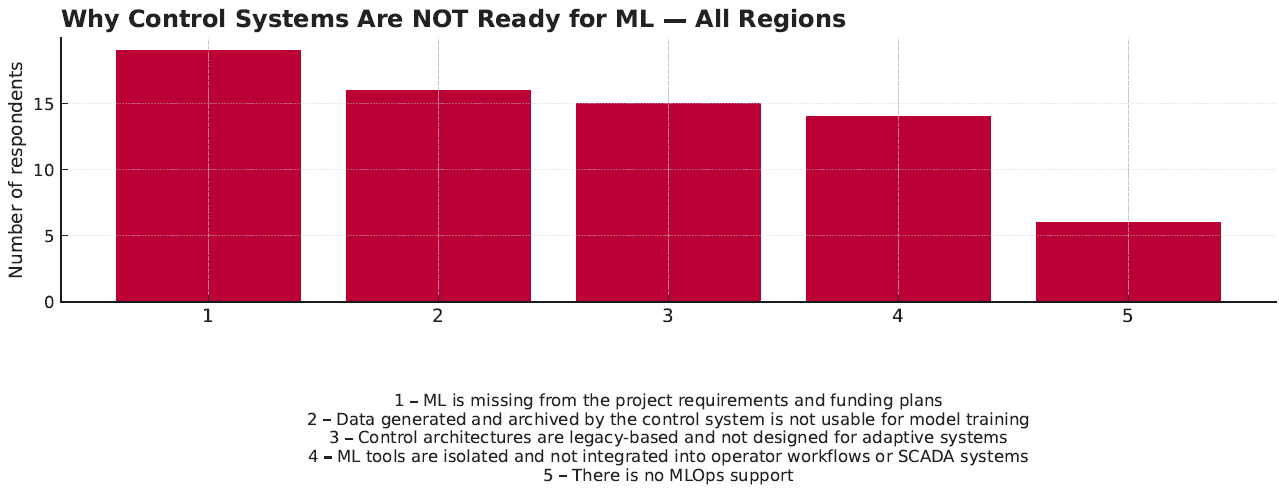

Those marking “not ready” cited recurring themes:

- ML is missing from the project requirements

- Legacy architectures are unsuited for adaptive control

- Data archives are missing raw, time-aligned signals

- ML tools isolated from SCADA/operator GUIs

- Lack of organisational glue: MLOps, approvals, and versioning
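To make the "time-aligned signals" point concrete, here is a minimal sketch of what alignment means in practice: for each timestamp of a reference signal, pick the most recent sample of a second signal, and reject it if it is too stale. The function name, the signal names, and the tolerance are hypothetical and chosen for illustration only. Real archivers and control-system frameworks provide their own mechanisms.

```python
from bisect import bisect_right

def align_to(reference_times, signal_times, signal_values, tolerance):
    """For each reference timestamp, return the latest signal sample taken
    at or before it, or None if that sample is older than `tolerance`.
    `signal_times` must be sorted in ascending order."""
    aligned = []
    for t in reference_times:
        i = bisect_right(signal_times, t) - 1  # index of last sample at or before t
        if i >= 0 and t - signal_times[i] <= tolerance:
            aligned.append(signal_values[i])
        else:
            aligned.append(None)  # no sufficiently fresh sample exists
    return aligned

# Hypothetical data: timestamps in milliseconds.
bpm_times_ms = [0, 100, 200, 300]
magnet_times_ms = [-10, 95, 150, 290]
magnet_current_a = [1.50, 1.52, 1.51, 1.49]

aligned = align_to(bpm_times_ms, magnet_times_ms, magnet_current_a, tolerance=20)
print(aligned)  # [1.5, 1.52, None, 1.49]
```

The `None` in the third slot is the kind of gap that tends to stay invisible until a model is trained on the archive: the nearest magnet reading is 50 ms old, so it cannot honestly be paired with that beam-position sample.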

Regional nuances in the reasons

Each region’s story differs slightly:

- Americas: Successes cluster around integration: in-house models connected to live workflows.

- Europe + Africa: Respondents stress infrastructure hurdles and data cleanliness.

- Asia: The primary barriers remain legacy controls and a skills gap, although several respondents report pilot-stage models in diagnostics.

Across all groups, the pattern is the same: ML progresses fastest where operators, control engineers, and data scientists work as one team.

This indicates that the most valuable breakthroughs are cultural rather than algorithmic: they come from bringing operators, control engineers, and ML specialists together in the same design reviews, with shared dashboards and a common purpose.

This usually results in fewer hero scripts and more reusable Control Systems components that survive staffing changes in teams.

The community is past debating whether ML can help in controlling Big Physics machines.

The primary challenge now is getting the stack and practices right so that ML models can move from promising demos to daily operations under a disciplined development lifecycle.

This will enable the ML advantages to add up: fewer aborted runs, quicker startups, steadier beams, and lower energy footprints.

The future is bright.

The future is a dependable ML co‑pilot on the panel.

ICALEPCS book of abstracts

The ICALEPCS 2025 Book of Abstracts strongly supports the findings of the survey. Read the book of abstracts here

Most published cases fall into three clusters that match where respondents said ML is most applicable:

- Black-box optimisation for setup and tuning.

LCLS reports “leveraging Bayesian optimization with on-the-fly machine learning … to automatically optimise the beam quality and streamline experiment setup.” SLAC’s Xopt/Badger platform generalises this approach across multiple labs, a sign that teams are moving from one-off notebooks to reusable stacks.

- In-the-loop control and stabilisation.

NSLS-II presents a model-based reinforcement learning framework that “stabilises the beam position … down to ~1 μm”. PSI/Transmutex demonstrate the “first practical ML-based beam control in a high-power cyclotron” with a 12-day operational test, improving extraction efficiency and reducing operator workload. In both cases, ML runs inside the feedback path, not as an offline analysis tool.

- Plant-level optimisation and infrastructure.

CERN describes a neural-network MPC for HVAC aiming for reproducible energy savings while maintaining stability constraints, evidence that ML’s ROI extends beyond beam physics to facility systems.

A fourth, enabling theme is infrastructure readiness: digital-twin workflows used for emittance and phase-space tuning, and data platforms that standardise high-rate acquisition, archiving and analysis.

These are precisely the “plumbing” elements our respondents linked to ML-readiness.

What the abstracts tell us

- Integration works.

Deployments that succeed run inside feedback loops and surface outputs in operator UIs, mirroring respondents who marked “ready” because ML was wired into existing workflows.

- Data and compute pathways decide outcomes.

Successful cases rely on fast, standardised access to time-aligned data or digital twins, reinforcing our “data bottleneck” message.

- Application focus is consistent.

The abstracts concentrate on tuning, orbit/trajectory control and phase-space shaping; ML is rare in protection layers, consistent with barriers such as legacy architectures and guardrails.

- Regional flavour matches the poll.

Cases skew toward the Americas and Europe (SLAC, NSLS-II, LANSCE, PSI, CERN, INFN), with Asia featuring more simulation-heavy work, broadly matching our regional readiness split.

Explore our first blog on future-proofing accelerator operations with ML.

Would you like to receive anonymized, raw data of the survey?

About the author

David Pahor is a physicist who exchanged software development for technical writing and now works in Cosylab's Marketing, where he mints content. In his free time, he writes short fiction and waits on two bull terriers.