How an FPGA-based AI can guide a vehicle

What is DAIS – Distributed Artificial Intelligent Systems?

The DAIS project is a pan-European endeavour and aims to develop advanced processing solutions based on edge AI software and innovative hardware components.

The objective of DAIS is to research problems of running existing algorithms on vastly distributed edge devices in IoT and find solutions for them. DAIS features a multitude of industry-driven use cases embedded into the application domains, such as Digital Industry, Digital Life, and Smart Mobility.

DAIS receives its funding from the Key Digital Technologies Joint Undertaking (KDT JU) programme, which is itself supported by the European Union’s Horizon 2020 research and innovation programme.

Why is Edge Computing the Next New Thing?

Edge IoT devices perform some level of data processing and analysis at the edge of a network rather than relying on a centralised server or cloud-based system to do so.

Edge computing brings smart resources closer to the user while keeping private and sensitive data on the IoT device. It also lowers network latency and bandwidth demands, improves energy efficiency, and generally scales better than cloud services. At the same time, recent advances in machine learning (ML) models and big data have propelled artificial intelligence applications into just about every data collection and processing domain. In these domains, real-time and safety-critical applications demand quick decisions based on processing gigabytes of data per second, which cannot be streamed quickly enough over the internet.

The Use Case for Automated Guided Vehicles and AI

One such application uses sensors and cameras mounted on vehicles to enable autonomous driving. It is also one of the six DAIS demonstrators for the Digital Industry: the “AI-Driven AGV for Obstacle Detection and Avoidance” use case.

Automated guided vehicles (AGVs) are robotic, self-driving vehicles that use data from various sensors to navigate and transport materials within a factory or warehouse facility. These vehicles have been around for decades, but recent technological advances have made them more efficient and cost-effective.

Many companies already operate fleets of automated guided vehicles that manoeuvre using RFID tags and floor-mounted magnetic tape. A recurring problem with this type of AGV transport is the possibility of collisions with people, or with objects that incautious workers have left in the vehicles’ path. Such obstacles cause production delays and force the AGV operator to remove them from the vehicles’ pathways manually.

The Technology Partners in the DAIS Project

Two companies and one research facility – Cosylab, TPV and Jožef Stefan Institute – have joined forces in the “AI-Driven AGV for Obstacle Detection and Avoidance” use case. They are developing a smart solution that overcomes the challenges mentioned above by combining LiDAR (Light Detection And Ranging) and vision data to form a representation of the environment. Using this information, the automated guided vehicle will be able to depart from the magnetic tape on its regular path, circumvent the obstacle, and return to the magnetic tape as soon as possible.
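To make the idea of combining LiDAR and vision data more concrete, here is a minimal sketch of one possible fusion step: attaching a distance to an object that a camera-based detector has found. It is not the project's actual algorithm. It assumes a pinhole camera model, a camera and LiDAR that point in the same direction from roughly the same spot, and a planar scan described by a start angle, an angular step and a list of ranges (the layout used by the ROS `sensor_msgs/LaserScan` message). All function names and parameter values are hypothetical.

```python
import math


def pixel_to_bearing(u, image_width, hfov_rad):
    """Horizontal bearing (rad) of pixel column u; positive means left of the optical axis."""
    fx = (image_width / 2.0) / math.tan(hfov_rad / 2.0)  # focal length in pixels
    cx = image_width / 2.0                               # assumed principal point
    return math.atan2(cx - u, fx)


def range_for_detection(box_u_min, box_u_max, image_width, hfov_rad,
                        angle_min, angle_increment, ranges):
    """Smallest valid LiDAR range among beams inside the bounding box's bearing span.

    Returns None when no valid beam falls inside the span.
    """
    a = pixel_to_bearing(box_u_min, image_width, hfov_rad)
    b = pixel_to_bearing(box_u_max, image_width, hfov_rad)
    lo, hi = min(a, b), max(a, b)
    hits = []
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment
        # Skip invalid returns (inf, NaN, zero) before comparing angles.
        if lo <= angle <= hi and math.isfinite(r) and r > 0.0:
            hits.append(r)
    return min(hits) if hits else None
```

For example, with a 640-pixel-wide image and a 90-degree field of view, a bounding box spanning columns 280 to 360 covers bearings of roughly ±7 degrees, so only the beams pointing almost straight ahead contribute to the distance estimate.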

Challenges and Goals for an AI-Driven Automated Guided Vehicle

The research challenges in the “AI-Driven AGV for Obstacle Detection and Avoidance” use case are twofold. The first is the development of algorithms to detect and represent the environment properly. The factory floor can be a very unpredictable and dynamic environment, with people and machines moving constantly. The second challenge is to support these algorithms at the edge device with limited computational and energy resources.

“AI-Driven AGV for Obstacle Detection and Avoidance” has two main goals:

- to form a representation of the environment from LiDAR and camera data and detect obstacles on the AGV’s path;

- to use that representation to circumvent detected obstacles where necessary and return the AGV to its regular path as soon as possible.
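The two goals above imply three distinct driving behaviours: following the tape, detouring around an obstacle, and finding the tape again. One simple way to organise them is a small state machine. The sketch below is purely illustrative; the mode names and the two boolean inputs (`obstacle_ahead`, `tape_detected`) are hypothetical and stand in for whatever the perception stack and the magnetic strip sensor actually report.

```python
from enum import Enum


class Mode(Enum):
    FOLLOW_TAPE = "follow_tape"  # normal operation on the magnetic tape
    AVOID = "avoid"              # off the tape, driving around an obstacle
    REJOIN = "rejoin"            # obstacle passed, searching for the tape


def next_mode(mode, obstacle_ahead, tape_detected):
    """Return the next driving mode given the current mode and sensor flags."""
    if mode is Mode.FOLLOW_TAPE:
        return Mode.AVOID if obstacle_ahead else Mode.FOLLOW_TAPE
    if mode is Mode.AVOID:
        # Keep detouring until the way ahead is clear, then look for the tape.
        return Mode.AVOID if obstacle_ahead else Mode.REJOIN
    # Mode.REJOIN: a new obstacle takes priority over returning to the tape.
    if obstacle_ahead:
        return Mode.AVOID
    return Mode.FOLLOW_TAPE if tape_detected else Mode.REJOIN
```

Calling `next_mode` once per control cycle keeps the decision logic separate from perception and motion control, which makes each part easier to test on its own.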

The project’s deliverable will be an AI-driven automated guided vehicle based on the TPV company’s chassis, autonomously dealing with unexpected obstacles in its path. The use case will be implemented using machine learning and avoidance algorithms to guide the AGV around factory or warehouse obstacles.

Top-level System Description

Each of the three partners had a specific role to fulfil in the “AI-Driven AGV for Obstacle Detection and Avoidance” use case.

TPV provided the complete mechanical chassis of the AGV, including:

- the LiDAR unit;

- the magnetic strip sensor; and

- the NFC tag scanner.

Cosylab prepared the following:

- hardware for the computing platform;

- middleware, including custom firmware and an operating system for a System on a Chip (SoC) development board (see below for a detailed description);

- the camera and its interface to the system.

The Jožef Stefan Institute (IJS) trained a custom Machine Learning (ML) model for detecting and avoiding obstacles, built on the open-source framework and tool stack of the Robot Operating System (ROS), and implemented:

- auto-location using SLAM (Simultaneous Localisation and Mapping) algorithms;

- navigation and route planning based on metric maps;

- object avoidance and bypassing.
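Route planning on a metric map can be illustrated with a very small example. The sketch below runs a breadth-first search over an occupancy grid, where each cell represents a fixed-size patch of floor and is either free (0) or occupied (1). This is a textbook technique chosen for brevity, not the planner used in the project, which relies on the ROS tool stack; the grid, the function name and the 4-connected movement model are assumptions.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = occupied).

    Cells are (row, col) tuples. Returns the list of cells from start to goal,
    or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # doubles as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```

A usage example: in a three-row grid with a single occupied cell in the middle of the centre row, a path from one end of that row to the other has to step into a neighbouring row and back, which is the grid-level equivalent of leaving the tape and rejoining it.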

How Cosylab Combined its FPGA and Software Skills to Develop Custom Firmware

The chassis of the AGV is the foundation for all the other system components of the “AI-Driven AGV for Obstacle Detection and Avoidance” use case. Apart from power, the automated guided vehicle also provides connectivity to its built-in programmable logic controller (PLC) that controls movement and gathers sensor data.

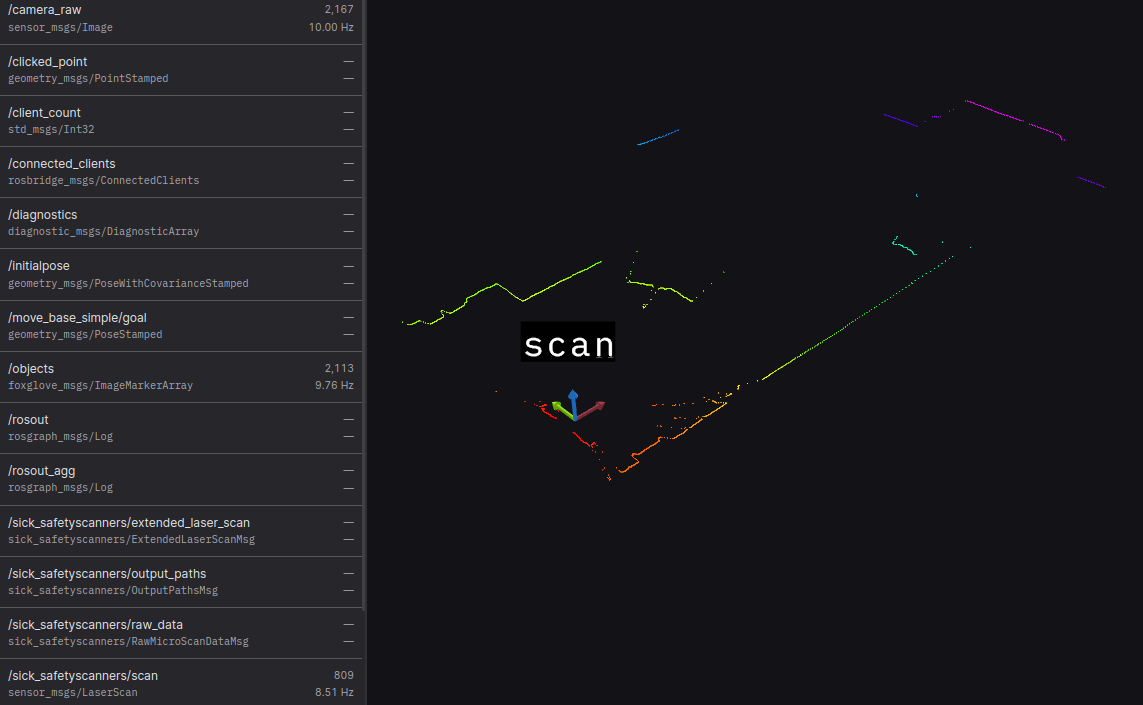

The data is exchanged via a Controller Area Network (CAN) bus using the CANopen protocol. The video camera used for object detection, which is attached to the front of the AGV case, is connected to the Xilinx ZCU104 development board (“the ZCU104”) through the USB interface.
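For readers unfamiliar with CANopen, the following sketch shows what a single request on the bus looks like at the byte level. It builds an SDO upload (read) request using the default identifiers from the CANopen application layer (0x600 plus the node ID for requests, 0x580 plus the node ID for responses). The article does not describe the PLC's object dictionary or node ID, so the node ID 5 in the usage note is hypothetical, and the function name is our own.

```python
import struct

SDO_REQUEST_BASE = 0x600   # client -> server, default CANopen assignment
SDO_RESPONSE_BASE = 0x580  # server -> client, default CANopen assignment


def sdo_upload_request(node_id, index, subindex):
    """Return (cob_id, data) for an SDO upload request to the given node.

    data is the 8-byte CAN payload: command byte 0x40, the 16-bit object
    index in little-endian order, the sub-index, and four unused zero bytes.
    """
    if not 1 <= node_id <= 127:
        raise ValueError("CANopen node IDs range from 1 to 127")
    data = struct.pack("<BHB4x", 0x40, index, subindex)
    return SDO_REQUEST_BASE + node_id, data
```

Calling `sdo_upload_request(5, 0x1018, 1)` asks node 5 for sub-index 1 of the standard identity object (the vendor ID). The resulting frame has identifier 0x605 and payload `40 18 10 01 00 00 00 00`, and the node would answer on identifier 0x585.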

The LiDAR unit is mounted below the camera, at the front of the AGV, and communicates with the ZCU104 over the Ethernet interface. The ZCU104 can be connected to the development computer via built-in Ethernet or a USB Wi-Fi card.
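With the LiDAR at the front of the vehicle, a first and very simple obstacle check is to ask whether any scan point falls inside a rectangular corridor directly ahead. The sketch below assumes a planar scan given as a start angle, an angular step and a list of ranges in metres, with angle 0 pointing forward and positive angles to the left (the convention of the ROS `sensor_msgs/LaserScan` message). The corridor dimensions and the function name are hypothetical, and a real system would add filtering and use the vehicle's true footprint.

```python
import math


def obstacle_in_corridor(ranges, angle_min, angle_increment,
                         half_width=0.4, look_ahead=2.0):
    """True if any valid beam end point lies in the rectangle ahead of the AGV.

    The rectangle spans 0..look_ahead metres forward and +/- half_width
    metres sideways, in the LiDAR's own frame.
    """
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0:
            continue  # skip invalid returns such as inf or NaN
        angle = angle_min + i * angle_increment
        x = r * math.cos(angle)  # forward distance
        y = r * math.sin(angle)  # lateral offset, positive to the left
        if 0.0 < x <= look_ahead and abs(y) <= half_width:
            return True
    return False
```

Converting each beam to Cartesian coordinates first, rather than thresholding the raw range, avoids false alarms from objects that are close but well off to the side of the path.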

The principal hardware for the control system is the Xilinx ZCU104 development board, based on the Xilinx UltraScale+ MPSoC (MultiProcessor System on a Chip), which includes both the FPGA circuitry and a general-purpose CPU with four main ARM Cortex-A cores. The hardware platform runs the operating system and custom firmware developed by Cosylab.

The firmware includes primary input/output (I/O) connectivity on the development board, while the FPGA integrates machine learning processors known as DPUs (Deep Learning Processor Units). This offloads neural network processing from the main CPU to the DPUs, which run custom-compiled neural network models.

Cosylab created a custom version of the Petalinux operating system, which includes application packages, libraries, development tools and configuration files.

The Linux image also includes a development installation of the Robot Operating System 1 (ROS1) Noetic.

Main Requirements for the “AI-Driven AGV for Obstacle Detection and Avoidance” project

- Sensor data collection;

- AGV localisation;

- Obstacle identification;

- Obstacle detection;

- Obstacle classification;

- Obstacle localisation;

- Sensor data fusion;

- Camera and LiDAR sensor interface;

- Steering control interface;

- AI algorithm running on FPGA;

- Obstacle avoidance;

- Central Control System (CCS) access;

- AGV AI electronics platform;

- Embedded platform operating system and firmware.

The Future of Automated Guided Vehicles

The future of AGV and IoT advancement holds promise, with many experts predicting a continued increase in their adoption in manufacturing and logistics. The global AGV market is expected to reach $5.5 billion by 2025, with a compound annual growth rate of 7.5%.

As these technologies continue to mature, we can expect even more benefits and efficiencies in transporting and handling materials within manufacturing facilities. The combination of AGVs and IoT has the potential to revolutionise manufacturing and drive industry innovation all over the world.

ABOUT THE AUTHORS

Dr Uros Legat is an experienced FPGA engineer with a background in academia. Uros specialises in timing systems, machine protection systems, beam diagnostic systems of particle accelerators and other big physics machines.

He is also a team lead of a group of developers passionate about developing custom high-tech systems on FPGA.

In his free time, he likes to do all kinds of sports and travel to distant and exotic destinations.

Matej Blagsic is an electronics engineer specialising in embedded software development. He enjoys learning about everything concerning emerging technologies and applying them to solve problems he is faced with. He loves tasting good food and cherishes a good dram.

Project website: https://dais-project.eu/

DAIS has received funding from the ECSEL Joint Undertaking (JU) under Grant agreement number: 101007273. The JU receives support from the European Union’s Horizon 2020 research and innovation programme and Sweden, Netherlands, Germany, Spain, Denmark, Norway, Portugal, Belgium, Slovenia, Czech Republic, Turkey.

This article reflects only the author’s view and the Commission is not responsible for any use that may be made of the information it contains.
